Unlocking the 3D Future: Turning Images into 3D Models with AI

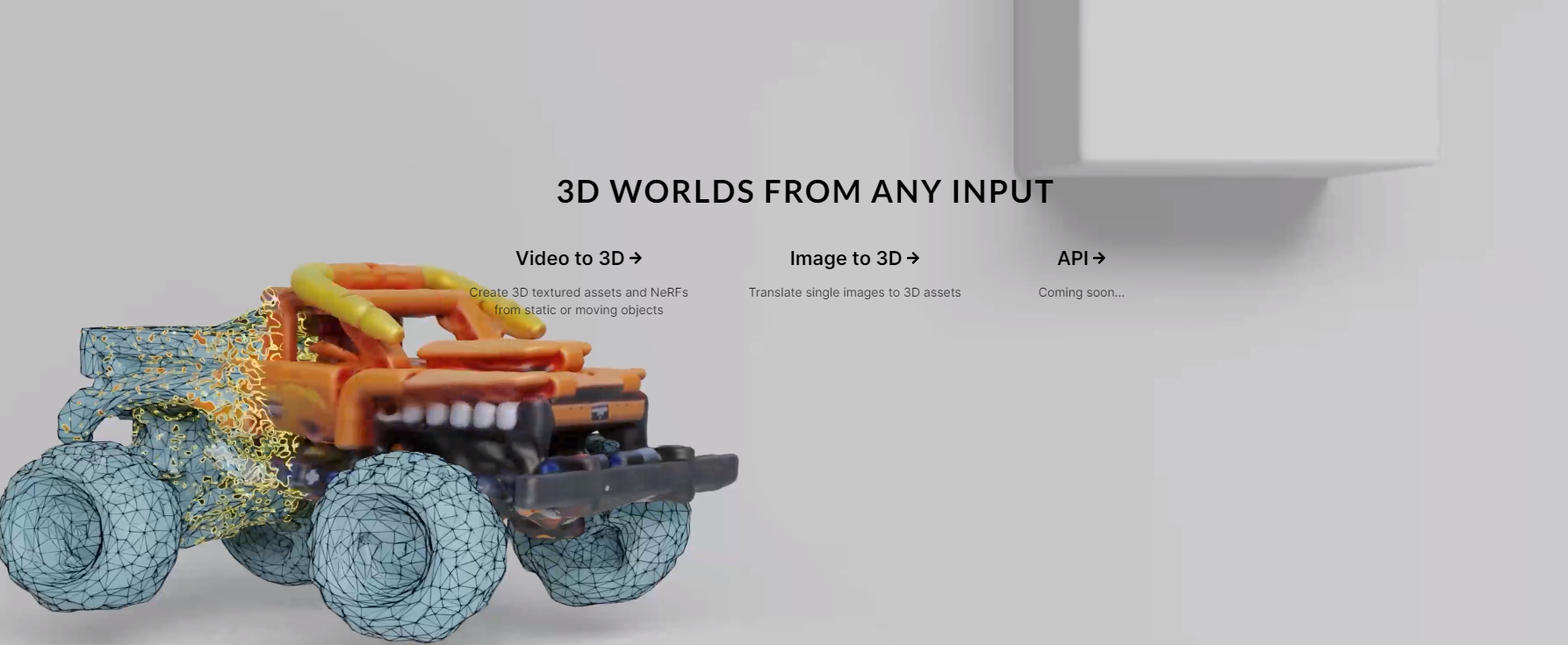

When it comes to turning your wildest ideas into tangible realities, AI technology has been breaking new ground. The first production AI system that can convert intricate 2D images into 3D models has been launched, addressing a problem that has gone unsolved for decades.

Creating 3D models from scratch has always been an incredibly complicated task, necessitating specific skills and, in many cases, days of painstaking work by professional artists and game developers. This high entry barrier meant that it was almost impossible for the average consumer to generate 3D content, and production-grade assets often required a hefty investment. Today, that's all about to change.

Welcome to the dawn of a new age, where creating 3D assets from any 2D image is no longer a distant dream, but an accessible reality.

3D Transformation for Everyone

This new AI system can turn any 2D image into game-engine-ready 3D assets, with the potential to reshape industries ranging from gaming to e-commerce. The possibilities this technology opens up are limited only by one's imagination.

Imagine having an ingenious idea for a unique piece of furniture or a whimsical creature. Starting from just a sketch, or from a concept rendered as an image with AI tools like Midjourney, Stable Diffusion, or DALL-E, you can bring your idea to life. There is no need for deep technical knowledge or advanced 3D modeling skills. Simply supply the image and let the AI create a detailed 3D model of your concept.

Exceptional Capabilities

The AI focuses on transforming images, not text, into 3D models. Images carry more stylistic detail and lose less information than a text description, which makes them more intuitive to work with and fits the existing workflows of concept artists in 3D content production. The process is further streamlined because generating images from text with AI models is already an established technique, so a text prompt can still serve as the starting point.

While this blog post emphasizes gaming applications, the approach is general-purpose and can scale to numerous applications. The AI's outputs outperform existing systems and hold up across a broad range of objects and characters. Compared to OpenAI's Shap-E model, this new AI model performs significantly better, and it continues to improve.

Artists can use these AI-generated models either as a 3D blockout from which to develop a production-ready asset or directly as finished outputs.

Rapid Progress and Wider Implications

Controlling the entire process and developing foundation AI models in-house has enabled rapid refinement of the system. Hallucinations such as extra heads or parts, a problem often associated with 3D generative models, have been substantially mitigated. Output quality continues to improve with more compute, data, and feedback loops.

One of the longstanding dreams in generative AI is to digitally synthesize dynamic 3D environments – interactive simulations consisting of objects, spaces, and agents. These simulations enable us to create synthetic worlds and data for robotics and perception, new content for games and metaverses, and adaptive digital twins of everyday and industrial objects and spaces. The hard-to-build and limited simulators of today are set to undergo a massive transformation with generative AI.

Introducing CommonSim-1: The Future of Simulation

Common Sense Machines has built a neural simulation engine called CommonSim-1. Users and robots can interact with CommonSim-1 via natural images, actions, and text, enabling them to rapidly create digital replicas of real-world scenarios or envision entirely new ones. The development of a new class of simulator that learns to generate 3D models, long-horizon videos, and efficient actions is now within reach thanks to recent advances in neural rendering, diffusion models, and attention architectures.

CommonSim-1 is at the core of a new era in 3D modeling and simulation. By transforming the way we interact with simulators, CommonSim-1 provides a range of functions, from controllable video generation to 3D content generation, and even natural language interpretation for scenario creation. The possibilities are endless, and the implications for industries ranging from gaming to industrial design are immense.

In the end, the promise of this AI technology isn't just about making 3D models easier to create. It's about democratizing creativity, so that anyone can bring their ideas to life. So, whether you're a seasoned game developer, an aspiring artist, or someone with a wild imagination, there's never been a better time to start creating. The future of 3D modeling is here, and it's yours to shape.